Stack内存管理

介绍

要启动go协程,必须要为其准备栈空间,用来存储函数内变量、执行函数调用等。在go协程退出后,其占用的stack内存也会随之一同释放。

实际应用中协程的启动、退出可能会比较频繁,runtime必须要做点什么来保证启动、销毁协程的代价尽量小。而申请、释放stack空间所需内存则是一个比较大的开销,因此,go runtime采用了stack cache pool来缓存一定数量的stack memory。申请时从stack cache pool分配,释放时首先归还给stack cache pool。

主要思想

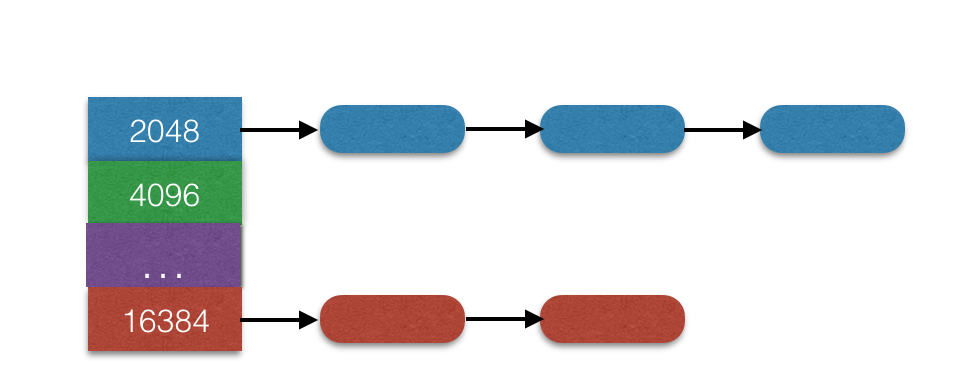

stack cache pool的主要思想是按照固定大小划分成多级:每级别其实是一个空闲stack队列:最小的stack size是_FixedStack=2KB。级别之间的大小按照2倍的关系递增。

同时为了效率考虑,除了全局的stack cache pool外,每个线程m还有自己的stack cache。这样,线程在分配的时候可以优先从自己的stack cache中查找,提高效率。

初始时,stack cache pool为空:当有stack分配需求时,首先向os申请一块较大的内存,将其按照该级别的stack大小切割后放在空闲stack列表上,然后再从该空闲stack列表上分配。主要结构如下:

数据结构

type mspan struct {

next *mspan // in a span linked list

prev *mspan // in a span linked list

start pageID // starting page number

npages uintptr // number of pages in span

freelist gclinkptr // list of free objects

// sweep generation:

// if sweepgen == h->sweepgen - 2, the span needs sweeping

// if sweepgen == h->sweepgen - 1, the span is currently being swept

// if sweepgen == h->sweepgen, the span is swept and ready to use

// h->sweepgen is incremented by 2 after every GC

sweepgen uint32

divMul uint32 // for divide by elemsize - divMagic.mul

ref uint16 // capacity - number of objects in freelist

sizeclass uint8 // size class

incache bool // being used by an mcache

state uint8 // mspaninuse etc

needzero uint8 // needs to be zeroed before allocation

divShift uint8 // for divide by elemsize - divMagic.shift

divShift2 uint8 // for divide by elemsize - divMagic.shift2

elemsize uintptr // computed from sizeclass or from npages

unusedsince int64 // first time spotted by gc in mspanfree state

npreleased uintptr // number of pages released to the os

limit uintptr // end of data in span

speciallock mutex // guards specials list

specials *special // linked list of special records sorted by offset.

baseMask uintptr // if non-0, elemsize is a power of 2, & this will get object allocation base

}

mspan是在go内存管理模块机器核心的一个数据结构,我们会在后面的章节中自习描述该结构的含义。

var stackpoolmu mutex

var stackpool [_NumStackOrders]mspan

主要流程

stackinit()

go程序在启动时首先会执行go runtime的初始化,此时会调用stackinit()来初始化stack cache pool。

func stackinit() {

if _StackCacheSize&_PageMask != 0 {

throw("cache size must be a multiple of page size")

}

for i := range stackpool {

mSpanList_Init(&stackpool[i])

}

mSpanList_Init(&stackFreeQueue)

}

初始化做的事情非常简单:初始化每个stack size级别的链表为空即可。

stackalloc(n uint32)

func stackalloc(n uint32) (stack, []stkbar) {

......

if stackCache != 0 && n < _FixedStack<<_NumStackOrders && n < _StackCacheSize {

order := uint8(0)

n2 := n

for n2 > _FixedStack {

order++

n2 >>= 1

}

var x gclinkptr

c := thisg.m.mcache

if c == nil || thisg.m.preemptoff != "" || thisg.m.helpgc != 0 {

// 线程本地stack cache为空

// 直接从全局stack cache pool分配

lock(&stackpoolmu)

x = stackpoolalloc(order)

unlock(&stackpoolmu)

} else {

// 直接从线程本地缓存分配

x = c.stackcache[order].list

if x.ptr() == nil {

// 如果线程本地分配完,则从全局stack cache pool分配一批给线程

// 再从线程的local stack cache分配

stackcacherefill(c, order)

x = c.stackcache[order].list

}

c.stackcache[order].list = x.ptr().next

c.stackcache[order].size -= uintptr(n)

}

v = (unsafe.Pointer)(x)

} else {

s := mHeap_AllocStack(&mheap_, round(uintptr(n), _PageSize)>>_PageShift)

if s == nil {

throw("out of memory")

}

v = (unsafe.Pointer)(s.start << _PageShift)

}

......

}

这里的分配算法思想是:

- 如果线程m的local stack cache为空,则直接从全局的stack cache pool中分配;

- 否则先从线程的local stack cache中分配,如果无法分配除,则需要先给线程分配出一批stack,赋给该线程,再从线程local stack cache中再分配。

stackpoolalloc(order uint8)

// Allocates a stack from the free pool. Must be called with

// stackpoolmu held.

func stackpoolalloc(order uint8) gclinkptr {

list := &stackpool[order]

s := list.next

// 如果该队列为空了,需要向heap管理模块再申请

// 分配的结果为一个mspan结构

if s == list {

s = mHeap_AllocStack(&mheap_, _StackCacheSize>>_PageShift)

if s == nil {

throw("out of memory")

}

if s.ref != 0 {

throw("bad ref")

}

if s.freelist.ptr() != nil {

throw("bad freelist")

}

// 将申请得到的空间mspan按照该级别的stack大小拆分成多项(gclinkptr)并添加到mspan的空闲链表(mspan.freelist)中

for i := uintptr(0); i < _StackCacheSize; i += _FixedStack << order {

x := gclinkptr(uintptr(s.start)<<_PageShift + i)

x.ptr().next = s.freelist

s.freelist = x

}

// 将分配的mspan插入到pool的空闲span链表

mSpanList_Insert(list, s)

}

x := s.freelist

if x.ptr() == nil {

throw("span has no free stacks")

}

s.freelist = x.ptr().next

s.ref++

// 如果分配导致s(mspan)的空闲链表变空

// 将其从pool的空闲span链表中摘除

if s.freelist.ptr() == nil {

// all stacks in s are allocated.

mSpanList_Remove(s)

}

return x

}

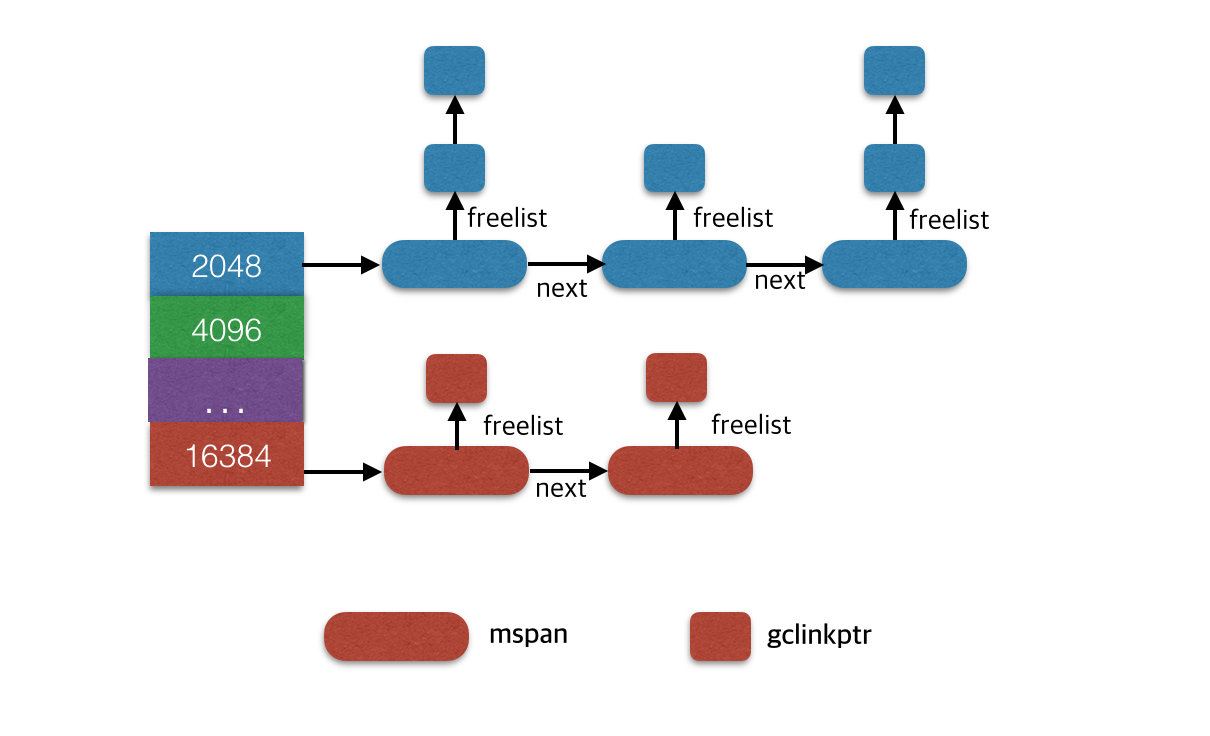

分配后的系统stack cache pool结构如下:

stackfree()

func stackfree(stk stack, n uintptr) {

......

if stackCache != 0 && n < _FixedStack<<_NumStackOrders && n < _StackCacheSize {

order := uint8(0)

n2 := n

for n2 > _FixedStack {

order++

n2 >>= 1

}

x := gclinkptr(v)

c := gp.m.mcache

// 如果禁用了m local stack cache,直接归还至全局stack cache pool

if c == nil || gp.m.preemptoff != "" || gp.m.helpgc != 0 {

lock(&stackpoolmu)

stackpoolfree(x, order)

unlock(&stackpoolmu)

} else {

// 如果m的local stack cache超标

// 先归还一部分(一半)至全局stack cache pool

if c.stackcache[order].size >= _StackCacheSize {

stackcacherelease(c, order)

}

x.ptr().next = c.stackcache[order].list

c.stackcache[order].list = x

c.stackcache[order].size += n

}

// 直接归还,暂时不考虑

} else {

s := mHeap_Lookup(&mheap_, v)

if s.state != _MSpanStack {

println(hex(s.start<<_PageShift), v)

throw("bad span state")

}

if gcphase == _GCoff {

// Free the stack immediately if we're

// sweeping.

mHeap_FreeStack(&mheap_, s)

} else {

// Otherwise, add it to a list of stack spans

// to be freed at the end of GC.

//

// TODO(austin): Make it possible to re-use

// these spans as stacks, like we do for small

// stack spans. (See issue #11466.)

lock(&stackpoolmu)

mSpanList_Insert(&stackFreeQueue, s)

unlock(&stackpoolmu)

}

}

}

stackpoolfree()

// Adds stack x to the free pool. Must be called with stackpoolmu held.

func stackpoolfree(x gclinkptr, order uint8) {

// 查找该x所属的mspan

s := mHeap_Lookup(&mheap_, (unsafe.Pointer)(x))

if s.state != _MSpanStack {

throw("freeing stack not in a stack span")

}

// 如果s之前的freelist为空,已经从pool的空闲span链表中摘除

// 现在归还了,说明有空闲空间了,将其重新添加到pool的空闲span链表中

if s.freelist.ptr() == nil {

mSpanList_Insert(&stackpool[order], s)

}

x.ptr().next = s.freelist

s.freelist = x

s.ref--

// s.ref==0说明span的freelist所有栈空间全部被释放

// 此时就可以将该span的空间释放

if gcphase == _GCoff && s.ref == 0 {

// Span is completely free. Return it to the heap

// immediately if we're sweeping.

//

// If GC is active, we delay the free until the end of

// GC to avoid the following type of situation:

//

// 1) GC starts, scans a SudoG but does not yet mark the SudoG.elem pointer

// 2) The stack that pointer points to is copied

// 3) The old stack is freed

// 4) The containing span is marked free

// 5) GC attempts to mark the SudoG.elem pointer. The

// marking fails because the pointer looks like a

// pointer into a free span.

//

// By not freeing, we prevent step #4 until GC is done.

mSpanList_Remove(s)

s.freelist = 0

mHeap_FreeStack(&mheap_, s)

}

}